Abstract

A new AI-powered framework can analyze, in real time, two hands as they manipulate an object.

A research team led by Professor Seungryul Baek of the UNIST Artificial Intelligence Graduate School has introduced the Query-Optimized Real-Time Transformer (QORT-Former), a framework that accurately estimates the 3D poses of two hands and an object in real time. Previous methods require substantial computational resources. QORT-Former instead achieves high efficiency while maintaining state-of-the-art accuracy.

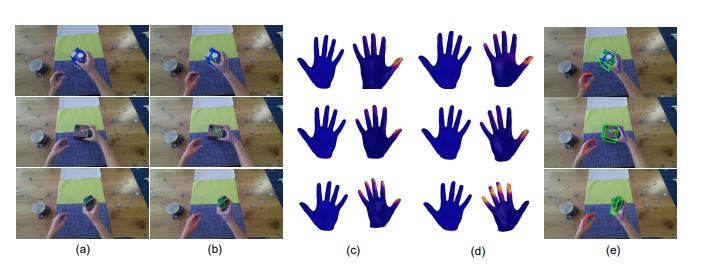

Figure 1. Examples of estimated 3D poses on the H2O dataset. Each row shows a separate example: (a) input RGB image, (b) our hand-object queries, (c) ground-truth contact map, (d) predicted contact map, and (e) final 3D pose estimation results.


To optimize performance, the team proposed a novel query division strategy that enhances query features using contact information between the hands and the object, combined with a three-step feature update within the transformer decoder. With only 108 queries and a single decoder, QORT-Former runs at 53.5 frames per second (FPS) on an RTX 3090 Ti GPU, which the team reports makes it the fastest known model for hand-object pose estimation.
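To put the reported throughput in concrete terms, the short sketch below converts frames per second into average time per frame. The 53.5 FPS figure comes from the article. The 30 FPS comparison target is an assumption, a common rule of thumb for "real time", and is not a number from the study. Note that average time per frame derived from throughput is not necessarily the same as end-to-end latency in a pipelined system.

```python
def frame_latency_ms(fps: float) -> float:
    """Return the average time per frame, in milliseconds, for a given throughput in FPS."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Throughput reported for QORT-Former on an RTX 3090 Ti (from the article).
QORT_FPS = 53.5
# Assumed real-time target: 30 FPS is a common convention, not a figure from the study.
TARGET_FPS = 30.0

latency = frame_latency_ms(QORT_FPS)
budget = frame_latency_ms(TARGET_FPS)

if __name__ == "__main__":
    print(f"QORT-Former: {latency:.1f} ms per frame")      # 18.7 ms
    print(f"30 FPS budget: {budget:.1f} ms per frame")     # 33.3 ms
    print(f"Headroom: {budget - latency:.1f} ms per frame") # 14.6 ms
```

Under this assumed 30 FPS target, roughly 14.6 ms per frame would remain for other work, such as capture or rendering in an AR/VR pipeline.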

Professor Seungryul Baek stated, "QORT-Former represents a significant advancement in the understanding of hand-object interactions." He further noted, "It not only enables real-time applications in augmented reality (AR), virtual reality (VR), and robotics, but also pushes the boundaries of real-time AI models."

"Our work demonstrates that efficiency and accuracy can be optimized simultaneously," co-first author Khalequzzaman Sayem remarked. "We anticipate broader adoption of our method in fields that require real-time hand-object interaction analysis."

The study has been accepted to the 39th Annual AAAI Conference on Artificial Intelligence (AAAI 2025), one of the world's most prestigious academic conferences in artificial intelligence. The research was supported by the Ministry of Science and ICT (MSIT), the AI Center, and CJ Corporation.

Journal Reference

Elkhan Ismayilzada, MD Khalequzzaman Chowdhury Sayem, Yihalem Yimolal Tiruneh, et al., "QORT-Former: Query-optimized Real-time Transformer for Understanding Two Hands Manipulating Objects," in Proc. of the Annual AAAI Conference on Artificial Intelligence (AAAI), Pennsylvania, USA, 2025.