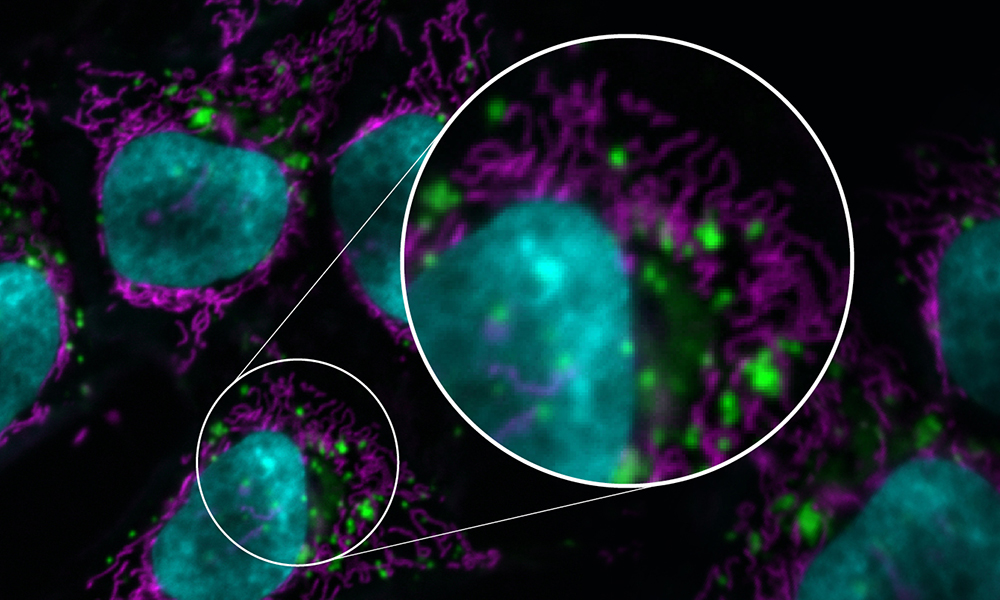

A false-color image of HeLa cells at a resolution of 0.0515 × 0.0515 µm², showing the nucleus in cyan, mitochondria in magenta, and lysosomes in green: an example of how to properly represent data in an image. (Helena Jambor and Christopher Schmied)

Images produced by a wide range of high-tech instruments are used throughout scientific research, both as illustrations and as sources of data. Recent advances in light (or optical) microscopy in particular have enabled sensitive, fast, and high-resolution imaging of diverse samples, and images are now more common in scientific papers than ever.

And yet there are no common standards for publishing images. This creates a major problem for an essential element of the scientific process: reproducibility. Any researcher trying to replicate a study's results without full information on how its key images were produced faces an impossible task.

"A lot of imaging scientists around the world have been very concerned by the reproducibility crisis," says North, senior director of the Bio-Imaging Resource Center (BIRC) at Rockefeller University. "People publish so little information about how they acquire their images and how they analyze them."

That's why an international consortium of experts, including North and BIRC image analyst Ved Sharma, drew on their collective knowledge of best practices to put together easy-to-follow guidelines for publishing images and image analyses. The guidelines were recently published in an open-access study in Nature Methods.

The guidelines were assembled in a two-year project involving dozens of imaging scientists from QUAREP-LiMi (Quality Assessment and Reproducibility for Instruments & Images in Light Microscopy), a group that includes 554 members from 39 countries.

The guidelines include practical checklists that scientists can follow to publish fully understandable and interpretable images.

Each checklist is divided into three levels: minimal, which describes essential requirements for reproducible images; recommended, which bolsters image comprehensibility; and ideal, which includes top-tier best practices.

For example, the checklists include standards for formatting, color handling, and annotation. Indicating where an inset comes from in the full image is a minimal requirement; providing intensity scales for grayscale, color, and pseudocolor images is recommended; and annotating image details such as pixel size and exposure time is ideal.

North says, "We advise everyone to publish their images in black and white rather than color, because your eye is much more sensitive to details in monochrome. Many investigators like color images because they're pretty and they look impressive. But they don't realize they're actually throwing away a lot of information."

The image-analysis checklists cover three different kinds of workflows: established, new, and machine-learning. Citing each step is a minimal requirement of an established workflow, for example, while providing a screen recording of or tutorial for a new workflow is ideal.

The guidelines are especially timely because NIH-funded researchers must now submit data management and sharing plans to meet the requirements of the new NIH Data Management and Sharing Policy, North says. "People have been saying, 'What are we supposed to write in that?' This paper gives them those guidelines."

A clearly articulated image-analysis workflow matters to scientific journals as well: as the primary disseminators of scientific knowledge, journals have a vested interest in ensuring that the papers they publish are transparent about how results were produced. To that end, journal editors took part in discussions with the study authors.

The guidelines can only make the data in a paper more accessible, Sharma says. "There is so much information that could be included for each image in a paper, but most of the time it's not available, or the reader has to dig deep into the paper to find out where the information is in order to make sense of the image. If scientists start adopting even the minimal standard for image publication, reproducibility would be so much easier for everyone."