Jülich, 15 August 2022 - Researchers at Forschungszentrum Jülich have simulated a neural network with 300 million synapses at unprecedented speed. They succeeded in computing the network's activity four times faster than real time. To achieve this record, the researchers used a prototype of IBM's INC-3000 "neural" supercomputer. The overall goal is to gain a better understanding of learning processes and brain development. In the following interview, Dr. Arne Heittmann provides insight into their work.

Machine learning and artificial intelligence (AI) have made enormous progress in recent years. However, the computational effort involved is immense. Neural networks are typically trained on supercomputers, and the computing power required to train machine learning systems doubles roughly every three months. If this trend continues, it will no longer be economically viable to perform certain learning tasks in the conventional way, using common algorithms and hardware.

One promising solution is novel computer architectures whose structure resembles that of the brain. The biological model is miles ahead of technical systems in terms of performance and efficiency. Such neuromorphic computers could also make an important contribution to understanding biological learning dynamics, which have very little in common with the algorithms used for machine learning to date. The fundamental principles by which these biological learning processes work are still largely unknown.

For some time now, Jülich researchers have been working to develop a neuromorphic computer of this kind. Such a computer should make it possible to simulate neural networks much faster than conventional supercomputers can, even taking future technological improvements into account. In pursuit of this aim, engineers from Forschungszentrum Jülich have now set a new speed record. In an interview, Dr. Arne Heittmann from the JARA Institute Green IT (PGI-10) talks about the work.

Dr. Arne Heittmann, what kind of network did you simulate?

It's a network that corresponds in size to roughly 1 cubic millimetre of the cerebral cortex. It consists of 80,000 neurons interconnected via 300 million synapses. This network does not yet have a learning function. Instead, it is intended as a realistic connectivity model and generates just enough stimulation to produce biologically realistic activity patterns. The network essentially keeps itself busy: it is a recurrent network, which means that it generates new activity from the activity patterns it has already produced. This network has been thoroughly investigated and is therefore ideal for comparing how well different computer architectures simulate neural networks. However, it comprises only roughly one millionth of a human brain and is therefore far too small to support reliable conclusions about the brain.
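As a rough sanity check of these figures, the short Python snippet below derives the average number of synapses per neuron and compares the neuron count with that of a whole human brain. The figure of about 86 billion neurons for the human brain is a commonly cited outside estimate assumed here; it is not stated in the interview.

```python
# Figures quoted in the interview for the cortical microcircuit model
NEURONS = 80_000
SYNAPSES = 300_000_000

# Assumption (not from the interview): the commonly cited estimate of
# roughly 86 billion neurons in the human brain
HUMAN_BRAIN_NEURONS = 86_000_000_000

synapses_per_neuron = SYNAPSES / NEURONS          # average synapses per neuron
brain_fraction = NEURONS / HUMAN_BRAIN_NEURONS    # share of the brain by neuron count

print(f"Average synapses per neuron: {synapses_per_neuron:,.0f}")
print(f"Fraction of a human brain (neurons): {brain_fraction:.1e}")
```

This gives an average of 3,750 synapses per neuron and a fraction of about 9e-7, consistent with "roughly one millionth" when measured by neuron count.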

Why do conventional computers have difficulty simulating learning processes?

Biological brains are structured completely differently from the computer systems used, for example, in high-performance computing (HPC). In classical supercomputers, relatively few high-speed processors perform the calculations, and memory units are strictly separated from processing units. The brain, by contrast, consists of a massive number of highly interconnected neurons. Although each individual neuron works extremely slowly and therefore uses very little energy, the brain as a whole is extremely powerful because its neurons work completely in parallel. In addition, the brain is very densely interconnected: the connections between neurons alone make up 80 % of brain volume, whereas the neurons themselves account for only 16-20 %.

Another fundamental problem of all conventional computer architectures is the strict separation between processor and memory. Biological neurons, however, can both process and store information. In conventional computer systems, all information must be transported through a narrow interface between the processor and the memory system. This is known as the von Neumann bottleneck, and it introduces a delay, or latency. As our investigations showed, this latency will not improve significantly even with new memory technologies, and it plays a crucial role in determining the simulation speed. Communication between neurons is also highly energy-efficient and takes place almost exclusively via extremely sparse activity patterns. For our supercomputer, this means that we are dealing exclusively with very short data packets. However, the prevailing communication standards in computing are optimized for large data packets, which results in high latencies when short packets are transmitted.
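Why short packets suffer can be illustrated with the standard latency-bandwidth model, in which each transfer costs a fixed start-up latency plus the payload size divided by the link bandwidth. The Python sketch below is a minimal illustration of that model; the 1 µs latency, the 10 GB/s bandwidth, and the packet sizes are hypothetical values chosen for illustration, not measurements from the Jülich study.

```python
def transfer_time_s(payload_bytes: int, latency_s: float, bandwidth_bps: float) -> float:
    """Latency-bandwidth model: fixed start-up latency plus serialisation time."""
    return latency_s + payload_bytes / bandwidth_bps


def link_efficiency(payload_bytes: int, latency_s: float, bandwidth_bps: float) -> float:
    """Fraction of the link's peak bandwidth actually achieved for one transfer."""
    ideal_s = payload_bytes / bandwidth_bps
    return ideal_s / transfer_time_s(payload_bytes, latency_s, bandwidth_bps)


# Hypothetical link parameters, chosen for illustration only
LATENCY_S = 1e-6        # 1 microsecond fixed latency per transfer
BANDWIDTH = 10e9        # 10 GB/s peak bandwidth (bytes per second)

# From spike-sized messages up to a large bulk transfer (sizes are assumptions)
for size in (16, 64, 4096, 1_000_000):
    eff = link_efficiency(size, LATENCY_S, BANDWIDTH)
    print(f"{size:>9} bytes: {eff:7.2%} of peak bandwidth")
```

With these assumed numbers, a 16-byte message uses well under 1 % of the peak bandwidth because the fixed latency dominates, whereas a 1 MB transfer reaches about 99 %. Sparse spike traffic sits at the unfavourable end of this curve.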

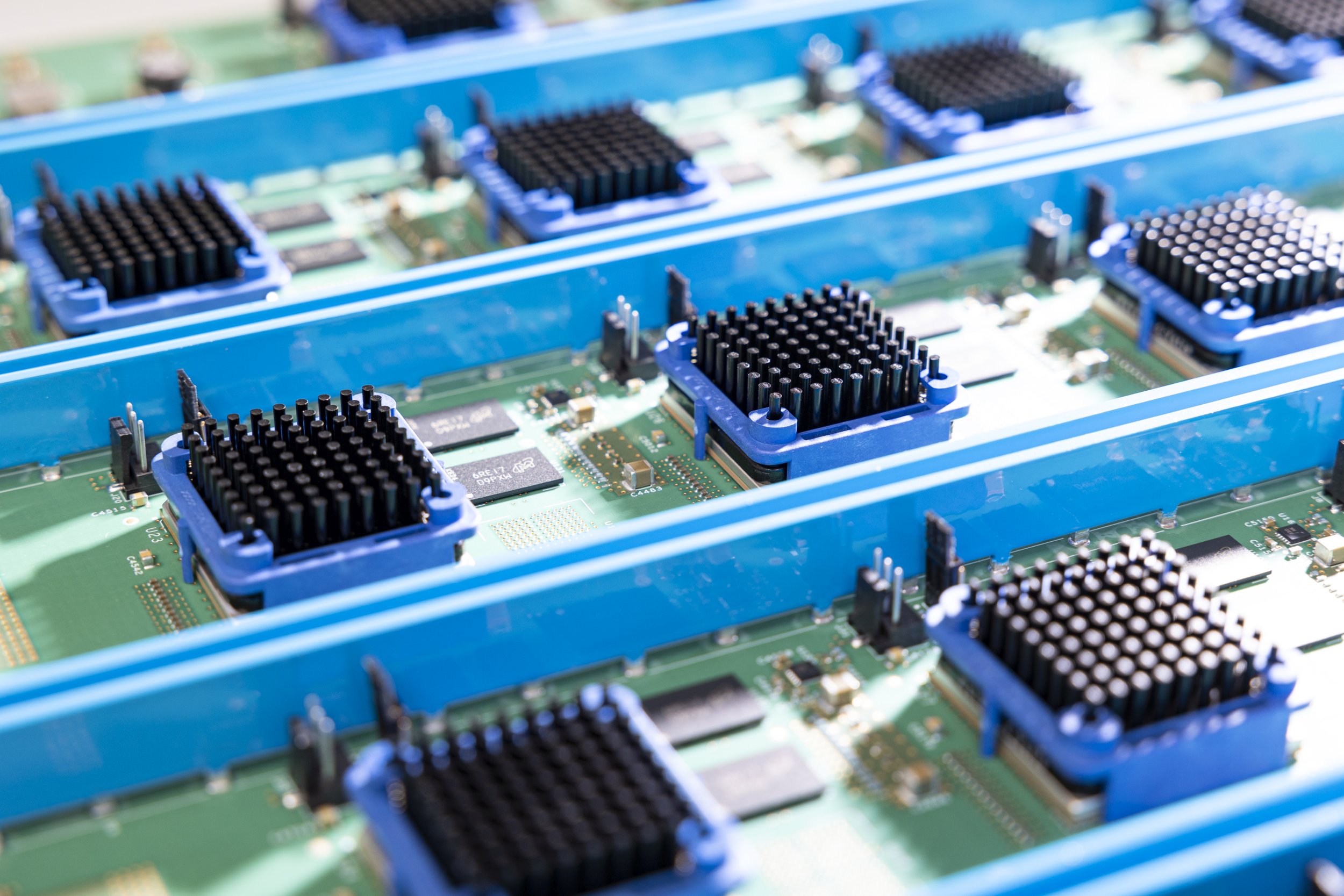

You simulated a network with 300 million synapses at unprecedented speed using an FPGA-based supercomputer from IBM. What kind of system is this? Is it a suitable solution for the future?

We primarily use IBM's INC system because it allows us to implement different circuits very flexibly. FPGAs (field-programmable gate arrays) contain freely programmable logic blocks, along with their own memory and other hardware components, on a single chip. Because the memory is placed so close to the logic, the memory latencies mentioned above are of no consequence. The blocks on the FPGA can also be reprogrammed as required, which is helpful, for instance, for investigating different architecture variants. As we are not always able to identify the optimal architecture and circuit in advance, the ability to reconfigure quickly helps us develop good solutions. In this way, we were able to find the optimal circuit, which enabled the speed-up factor mentioned above.

However, even for networks comprising only a few million neurons, such as those currently being investigated as models of the visual cortex, we will not be able to maintain the record simulation speed on the IBM INC system. On a conventional supercomputer, simulating such a network currently takes about 30 times longer than real time.
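Simulation speed is often expressed as a real-time factor: the wall-clock time divided by the biological time simulated, so values below 1 mean faster than real time. The Python sketch below converts the two speeds quoted in the interview into the wall-clock time needed for one simulated day, a duration chosen here purely for illustration. Note that the two figures refer to different networks (the 80,000-neuron microcircuit and multi-million-neuron models), so this is not a like-for-like comparison.

```python
def wall_clock_seconds(biological_seconds: float, real_time_factor: float) -> float:
    """Wall-clock time needed to simulate `biological_seconds` of network activity.

    real_time_factor = wall-clock time / biological time:
    < 1 means faster than real time, > 1 means slower.
    """
    return biological_seconds * real_time_factor


# Real-time factors corresponding to the speeds quoted in the interview
RTF_INC3000 = 0.25      # four times faster than real time (microcircuit)
RTF_CONVENTIONAL = 30   # roughly 30 times slower (multi-million-neuron model)

ONE_DAY_S = 24 * 3600   # one simulated day, chosen for illustration

inc_hours = wall_clock_seconds(ONE_DAY_S, RTF_INC3000) / 3600
conv_days = wall_clock_seconds(ONE_DAY_S, RTF_CONVENTIONAL) / ONE_DAY_S

print(f"INC-3000 (microcircuit): {inc_hours:.0f} hours")
print(f"Conventional supercomputer (larger model): {conv_days:.0f} days")
```

Under these assumptions, one day of biological activity takes 6 hours at the INC-3000 record speed but about 30 days at the conventional speed, which shows why real-time factors matter when studying slow processes such as learning.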

You also have to consider that current simulations use only very simple neuron and synapse models, which can be described by just a small number of mathematical equations. The underlying neuron model is more than 120 years old. It is conceivable that a more realistic depiction of neural dynamics and learning processes requires many more equations, for example to describe the dendrites: the fine branches through which neurons are interconnected. We now know that dendrites are not passive connections but are actively involved in information processing. It is estimated that more than two thirds of neural dynamics depend on dendrites. Yet these dendritic structures are currently not taken into account at all. In future, solving this large number of coupled equations in an acceptable amount of time will require considerably more computing units than a processor currently provides.
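The interview does not name the model, but the leaky integrate-and-fire (LIF) point neuron is a typical example of the simple models described here: the entire neuron is reduced to a single membrane voltage governed by one linear differential equation, plus a threshold-and-reset rule. The minimal Python sketch below integrates it with the forward Euler method; all parameter values are illustrative assumptions, not those of the Jülich simulation.

```python
def simulate_lif(input_current_na: float,
                 t_stop_ms: float = 200.0,
                 dt_ms: float = 0.1,
                 tau_m_ms: float = 10.0,
                 r_m_mohm: float = 10.0,
                 v_rest_mv: float = -65.0,
                 v_thresh_mv: float = -50.0,
                 v_reset_mv: float = -65.0) -> list[float]:
    """Simulate one leaky integrate-and-fire neuron driven by a constant current.

    Membrane equation (integrated with forward Euler):
        tau_m * dV/dt = -(V - V_rest) + R_m * I
    Units: MOhm * nA = mV. Returns spike times in ms.
    """
    v = v_rest_mv
    spike_times = []
    n_steps = int(round(t_stop_ms / dt_ms))
    for step in range(n_steps):
        # One Euler step of the single membrane equation
        dv_dt = (-(v - v_rest_mv) + r_m_mohm * input_current_na) / tau_m_ms
        v += dv_dt * dt_ms
        # Threshold-and-reset rule stands in for the detailed spike dynamics
        if v >= v_thresh_mv:
            spike_times.append((step + 1) * dt_ms)
            v = v_reset_mv
    return spike_times


# 1 nA drives V towards -55 mV (below threshold): no spikes.
# 2 nA drives V towards -45 mV (above threshold): regular firing.
print(len(simulate_lif(1.0)), len(simulate_lif(2.0)))
```

Because the neuron is a single point, this model has no dendrites at all. A compartmental model that resolves dendrites would replace the single voltage with one such equation per dendritic segment, each coupled to its neighbours, which is why the number of equations grows so quickly.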

Other neuromorphic computer systems, such as the University of Manchester's SpiNNaker architecture or Heidelberg University's BrainScaleS system, are also used for neural simulations. What are the respective advantages and disadvantages of these systems?

In principle, the more directly a system maps a neural network onto hardware, the faster it is. Heidelberg's BrainScaleS system consists of multiple wafers, with the neurons and their connections implemented directly on the chip. This makes the system inherently very fast. However, the size of network it can represent is limited. It would not be possible to simulate 300 million synapses, as we did in our simulation. Another problem with this kind of specialized architecture is that, under certain circumstances, you lack the flexibility to integrate new features.

The British SpiNNaker system, in contrast, is similar to a conventional supercomputer. It is a massively parallel computer with several hundred thousand ARM processors, which are actually optimized for mobile communication applications, and a special communications network. As in all conventional computers, processors and memory are separate, so you inevitably run into latency problems, i.e. delays in transporting information.

And what about graphics processing units (GPUs), which are also used intensively for artificial intelligence? Aren't these ultimately related applications?

At present, GPUs are predominantly used for classical artificial neural networks; a realistic depiction of biological networks is usually not the main aim here. The focus is mainly on deep learning algorithms. GPU-based systems offer an extremely high degree of parallelism, meaning that they have a comparatively large number of processing units. But even in these systems, data need to be transported back and forth between the processor and the memory. Compared with the biological brain, GPUs also still consume a great deal of energy. A human brain has a power consumption of roughly 20 watts. In contrast, typical server farms with GPU-based compute nodes draw several megawatts, around a hundred thousand times as much power.
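The factor of a hundred thousand follows directly from the two power figures. A quick Python check is shown below; the 2 MW and 5 MW values are assumed examples of "several megawatts", not measured data.

```python
def power_ratio(consumer_watts: float, brain_watts: float = 20.0) -> float:
    """How many times more power a system draws than a ~20 W human brain."""
    return consumer_watts / brain_watts


# "Several megawatts": 2 MW and 5 MW are illustrative values, not measurements
for farm_mw in (2, 5):
    ratio = power_ratio(farm_mw * 1e6)
    print(f"{farm_mw} MW server farm: {ratio:,.0f} times the brain's power")
```

A 2 MW installation already corresponds to 100,000 times the brain's power budget, and larger installations push the ratio higher still.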

The IBM supercomputer you used for your simulation was just a test system. What final system do you have in mind?

In the project entitled "Advanced Computing Architectures (ACA): towards multi-scale natural-density Neuromorphic Computing", we are working to identify the concepts required to compute large and highly complex networks. The target is a network with 1 billion neurons, which is as big as a mammalian brain. In this project, we are cooperating with RWTH Aachen University, the University of Manchester, and Heidelberg University, some of which are pursuing their own neuromorphic approaches. The overarching goal of the project is to build a computer system that enables a time-lapse analysis of learning processes. We used IBM's INC-3000 system as part of a feasibility study, which showed that even with state-of-the-art technologies there are fundamental limits to the performance gains that can be achieved. New and unconventional architectures, circuit concepts, and memory concepts are therefore essential. As indicated above, concepts for memory architecture, communication, and numerical computing units need to be completely rethought.

The long-term goal is to develop an architecture that is specifically tailored to this kind of simulation. Such a neuromorphic accelerator architecture would then ideally be coupled to the Jülich supercomputing infrastructure. But we're talking about a very long time frame here, about 15 to 20 years.

- Interview: Tobias Schlößer -

Original publication: Arne Heittmann, Georgia Psychou, Guido Trensch, Charles E. Cox, Winfried W. Wilcke, Markus Diesmann and Tobias G. Noll

Simulating the Cortical Microcircuit Significantly Faster Than Real Time on the IBM INC-3000 Neural Supercomputer, Front. Neurosci. (published on 20 January 2022), DOI:10.3389/fnins.2021.728460