Abstract

Professor Seungryul Baek and his research team at the Artificial Intelligence Graduate School at UNIST have developed a technology that generates precise 3D motion from simple text input, without the need for complex initial settings. Known as Text2HOI, the technology produces interactions between hands and objects from text entered in a prompt window, paving the way for commercialization in the 3D virtual reality field.

Text2HOI can generate actions such as holding and manipulating objects from text commands. Because it requires little setup, it is easy to use, and its potential applications span industries including virtual reality (VR), robotics, and medical care.

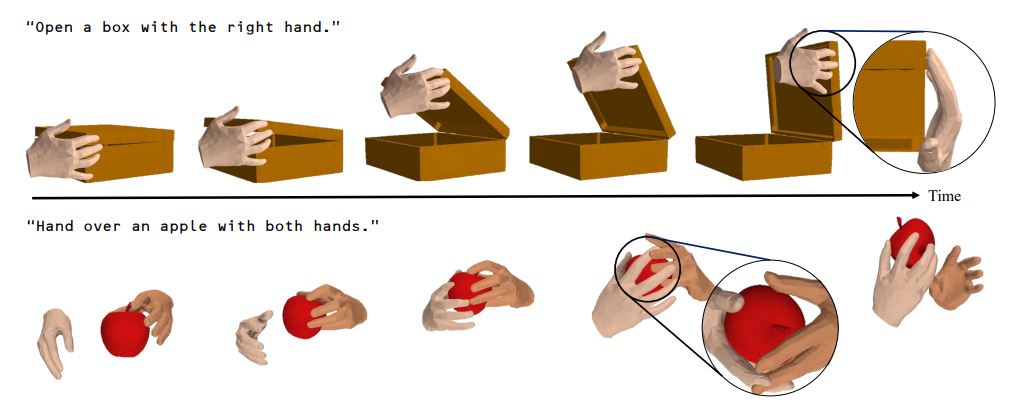

By analyzing the user's text, Text2HOI predicts where the hands are likely to touch the object named in the command. For instance, given the command "Hand over an apple with both hands," it probabilistically estimates the likely contact points between the hands and the apple. This enables precise hand motions when picking up the apple, with hand position and angle adjusted to the apple's size and shape.
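To make the idea of a contact map concrete, here is a minimal Python sketch. It is not the team's method: Text2HOI predicts contact from text and the object mesh with a learned model, whereas this toy derives a contact score from a known hand pose by distance. The function names, the Gaussian falloff, the `sigma` value, the 0.5 threshold, and the apple coordinates are all assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]


def contact_map(object_points: List[Point], hand_joints: List[Point],
                sigma: float = 0.02) -> List[float]:
    """Return one contact score in [0, 1] per object point.

    Assumed scoring rule: exp(-d^2 / (2 * sigma^2)), where d is the
    distance (in meters) from the point to its nearest hand joint.
    """
    scores = []
    for p in object_points:
        d = min(math.dist(p, j) for j in hand_joints)
        scores.append(math.exp(-(d * d) / (2.0 * sigma * sigma)))
    return scores


def likely_contacts(scores: List[float], threshold: float = 0.5) -> List[int]:
    """Indices of object points whose score reaches the threshold."""
    return [i for i, s in enumerate(scores) if s >= threshold]


# Hypothetical data: four points on an apple of radius 4 cm,
# and one joint from each hand resting just outside its sides.
apple_points = [(0.04, 0.0, 0.0), (-0.04, 0.0, 0.0),
                (0.0, 0.04, 0.0), (0.0, -0.04, 0.0)]
hand_joints = [(0.045, 0.0, 0.0), (-0.045, 0.0, 0.0)]

scores = contact_map(apple_points, hand_joints)
print(likely_contacts(scores))  # prints [0, 1]
```

In this toy setup only the two side points of the apple score as likely contacts, which matches the intuition of gripping the apple with both hands from either side.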

Figure 1. Given a text and a canonical object mesh as prompts, the research team generated 3D motion for hand-object interaction without requiring object trajectory and initial hand pose. They represented the right hand with a light skin color and the left hand with a dark skin color. The articulation of a box in the first row is controlled by estimating an angle for the pre-defined axis of the box.

The versatility of this technology allows for its integration into diverse sectors, from simulating medical procedures to controlling character behavior in games and virtual reality, as well as facilitating complex scientific experiments virtually. In the realm of robotics, Text2HOI opens up possibilities for natural interactions with robots through accurate hand motion control.

Professor Baek expressed optimism about the broad applications of Text2HOI, noting its potential to advance virtual and augmented reality (VR/AR), robotics, and medical fields. He emphasized a commitment to continuing research that benefits society.

First author Junuk Cha highlighted the potential for Text2HOI to serve as a fundamental tool in linking text prompts with interactive hand and object motions, thereby promoting further research in this area.

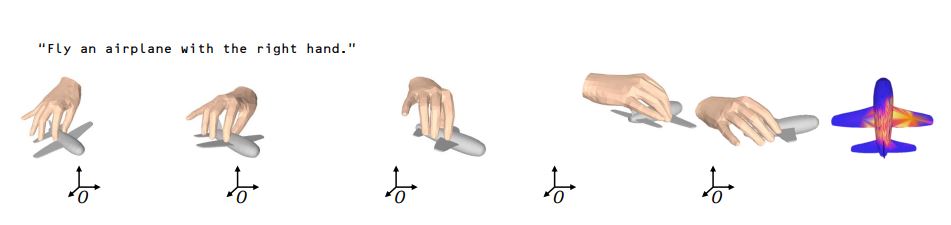

Figure 2. The research team demonstrated the generated hand-object motions and the predicted contact map results. The results shown are for objects seen during training.

The findings of this research were published online at the Conference on Computer Vision and Pattern Recognition (CVPR) on June 17, 2024. The study was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP), the National Research Foundation of Korea (NRF), the Ministry of Science and ICT (MSIT), the Korea Institute of Maritime Science and Technology Promotion (KIMST), and the CJ Enterprise AI Center.

Journal Reference

Junuk Cha, Jihyeon Kim, Jae Shin Yoon, and Seungryul Baek, "Text2HOI: Text-guided 3D Motion Generation for Hand-Object Interaction," CVPR (2024).