A new technology that combines satellite data with numerical model data for forest fire detection has been developed, offering a more comprehensive and adaptable approach to monitoring and responding to wildfires. The solution, developed by Professor Jungho Im and his team in the Department of Civil, Urban, Earth, and Environmental Engineering at UNIST, has the potential to significantly reduce the damage caused by medium and large forest fires.

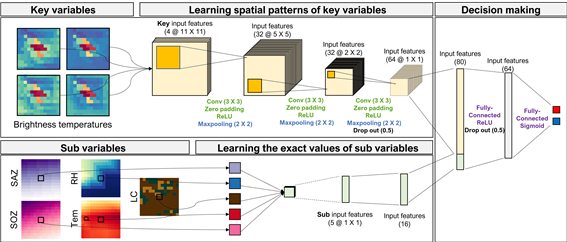

Traditional wildfire detection systems have relied solely on satellite data for over two decades. The research team led by Professor Im sought to enhance this approach by integrating the numerical model data used in weather forecasting. Drawing on inputs such as relative humidity, surface temperature, and satellite observation angle, the team developed a deep learning model with a dual-module convolutional neural network (DM CNN) structure, which extracts features from the satellite data and the numerical model data independently and then combines them.
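The dual-module idea can be illustrated with a minimal sketch: one module processes the satellite patch, a second module processes the numerical model patch, and their features are concatenated before a final fire/no-fire score is computed. This is not the team's implementation. The function names, channel counts, filter sizes, random weights, and the single-layer design are all assumptions made for illustration, and only the Python standard library is used.

```python
import math
import random


def correlate(channel, kernel):
    """Valid 2-D cross-correlation of one input channel with one kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(channel) - kh + 1
    out_w = len(channel[0]) - kw + 1
    return [
        [
            sum(channel[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def module(channels, filters):
    """One CNN module: convolution, ReLU, then global average pooling.

    `filters` holds one entry per output feature; each entry is a list of
    kernels, one kernel per input channel.
    """
    features = []
    for kernels in filters:
        maps = [correlate(c, k) for c, k in zip(channels, kernels)]
        h, w = len(maps[0]), len(maps[0][0])
        activated = [
            max(0.0, sum(m[i][j] for m in maps))  # sum over channels, ReLU
            for i in range(h) for j in range(w)
        ]
        features.append(sum(activated) / (h * w))  # global average pool
    return features


def dual_module_score(sat_patch, nwp_patch, params):
    """Extract features from each source independently, then fuse them."""
    sat_features = module(sat_patch, params["sat_filters"])
    nwp_features = module(nwp_patch, params["nwp_filters"])
    fused = sat_features + nwp_features  # feature concatenation
    logit = params["bias"] + sum(
        w * x for w, x in zip(params["weights"], fused))
    return 1.0 / (1.0 + math.exp(-logit))  # sigmoid: fire probability


def random_patch(rng, n_channels, size):
    """Hypothetical input patch with values in [0, 1)."""
    return [[[rng.random() for _ in range(size)] for _ in range(size)]
            for _ in range(n_channels)]


def make_params(rng, sat_channels, nwp_channels, n_filters, ksize=3):
    """Untrained, randomly initialised weights (illustration only)."""
    def kernel():
        return [[rng.uniform(-1, 1) for _ in range(ksize)]
                for _ in range(ksize)]
    return {
        "sat_filters": [[kernel() for _ in range(sat_channels)]
                        for _ in range(n_filters)],
        "nwp_filters": [[kernel() for _ in range(nwp_channels)]
                        for _ in range(n_filters)],
        "weights": [rng.uniform(-1, 1) for _ in range(2 * n_filters)],
        "bias": 0.0,
    }


rng = random.Random(0)
# Assumed layout: 2 satellite channels; 3 auxiliary channels standing in for
# relative humidity, surface temperature, and satellite observation angle.
params = make_params(rng, sat_channels=2, nwp_channels=3, n_filters=4)
satellite = random_patch(rng, n_channels=2, size=7)
numerical = random_patch(rng, n_channels=3, size=7)
probability = dual_module_score(satellite, numerical, params)
print(f"fire probability (untrained weights): {probability:.3f}")
```

Because the weights are random, the printed value carries no meaning. The point is the data flow: the two sources never share a convolution, so each module can learn filters suited to its own input before the features are merged.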

Figure 1. Schematic of the dual-module convolutional neural network (DM CNN) structure.

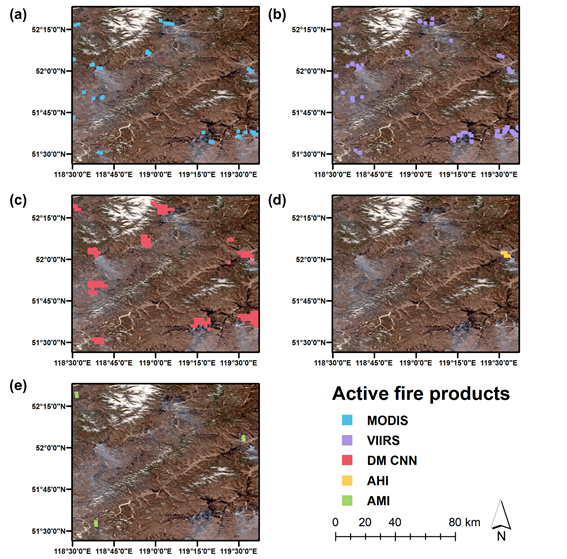

The developed technology was compared to widely used detection technologies such as MODIS/VIIRS, AHI, and AMI. Existing methods struggle to accurately detect forest fires due to mixed signals caused by factors like humidity and sun position. In contrast, the model developed by Professor Im's team considers multiple variables simultaneously, providing a significant advantage in maintaining detection accuracy despite changes in the environment.

Real-world experiments were conducted to validate the technology's performance under various environmental conditions. The results showed that the developed model outperformed existing detection methods and located wildfires more accurately. Although the satellite resolution is lower than that of narrow-range detection technologies, the model covers a wider spatial range (4 km²) and compensates with higher accuracy.

"This study maximizes the advantages of deep learning, enabling the convergence of heterogeneous data with diverse characteristics," stated Professor Im. "It represents a significant achievement in proposing a new direction for global forest fire detection technology."

Figure 2. Spatial distribution of active fire products from (a) MODIS, (b) VIIRS, (c) dual-module convolutional neural network (DM CNN), (d) AHI, and (e) AMI in EA 2. The background image is the RGB composite from Sentinel-2 Level 2A surface reflectance product S2MSI2A on April 10, 2020, 03:15 UTC. DM CNN, AHI, and AMI were acquired on April 10, 2020, 03:10 UTC. MODIS and VIIRS were acquired on April 10, 2020, 03:26 UTC and April 10, 2020, 04:06 UTC, respectively.

The study was carried out by Professor Jungho Im, as the corresponding author, with co-first authors Dr. Yoojin Kang and Taejun Sung from the Department of Civil, Urban, Earth, and Environmental Engineering at UNIST. The research was supported by the National Research Foundation of Korea, by the Korea Meteorological Administration Research and Development Program, by the 'Satellite Information Application' project of the Korea Aerospace Research Institute (KARI), and by the Korea Environment Industry & Technology Institute (KEITI) through the Project for developing an observation-based GHG emissions geospatial information map, funded by the Korea Ministry of Environment (MOE). The findings were published in the online version of the journal Remote Sensing of Environment (RSE) on September 15, 2023.

This advance in forest fire detection technology brings a more effective and proactive approach to monitoring and responding to wildfires within reach. By fusing satellite and numerical model data, the solution has the potential to improve wildfire management and help protect vulnerable ecosystems and communities.

Journal Reference

Yoojin Kang, Taejun Sung, Jungho Im, "Toward an adaptable deep-learning model for satellite-based wildfire monitoring with consideration of environmental conditions," Remote Sens. Environ. (2023).