The assistant professor of computer science develops technology to help kids benefit from and learn about artificial intelligence.

Can artificial intelligence-powered tools help enrich child development and learning?

That question is the crux of a series of research projects led by Zhen Bai, an assistant professor of computer science at the University of Rochester and the Biggar Family Fellow in Data Science at the Goergen Institute for Data Science. From tools to help parents of deaf and hard-of-hearing (DHH) children learn American Sign Language (ASL) to interactive games that demystify machine learning, Bai aims to help children benefit from AI and understand how it is impacting them.

Bai, an expert in human-computer interaction, believes that despite all the concern and angst about AI, the technology has tremendous potential for good, and that children are especially primed to benefit.

"Over the years, I've seen how kids get interested whenever we present technology like a conversational agent," says Bai. "I feel like it would be a missed opportunity if we don't prepare the next generation to know more about AI so they can feel empowered in using the technology and are informed about the ethical issues surrounding it."

Minimizing language deprivation in deaf and hard-of-hearing children

Early in her time at the University, Bai met a key collaborator who led her to a new avenue of research. At a new-faculty orientation breakfast, she happened to sit next to Wyatte Hall, a Deaf researcher and assistant professor in the University of Rochester Medical Center's Department of Public Health Sciences. The two bonded over a shared interest in childhood development and learning.

Hall explained some of the unique challenges that deaf and hard-of-hearing children face in cognitive and social development. More than 90 percent of DHH children are born to hearing parents, and often the very first deaf person those parents meet is their own baby. In early human development, there is a neurocritical period of language acquisition (approximately the first five years of a child's life) during which children need to build a first-language foundation. Having parents who do not know a signed language, combined with the limits of technologies such as cochlear implants and hearing aids, increases the risk that DHH children will experience the negative developmental outcomes associated with language deprivation.

"I learned a lot from Dr. Hall about this concept of language deprivation and became fascinated with the idea of how technology could play a role to make life easier," says Bai. "I wanted to explore how to help facilitate this very intimate bonding from day one between parents and their kids."

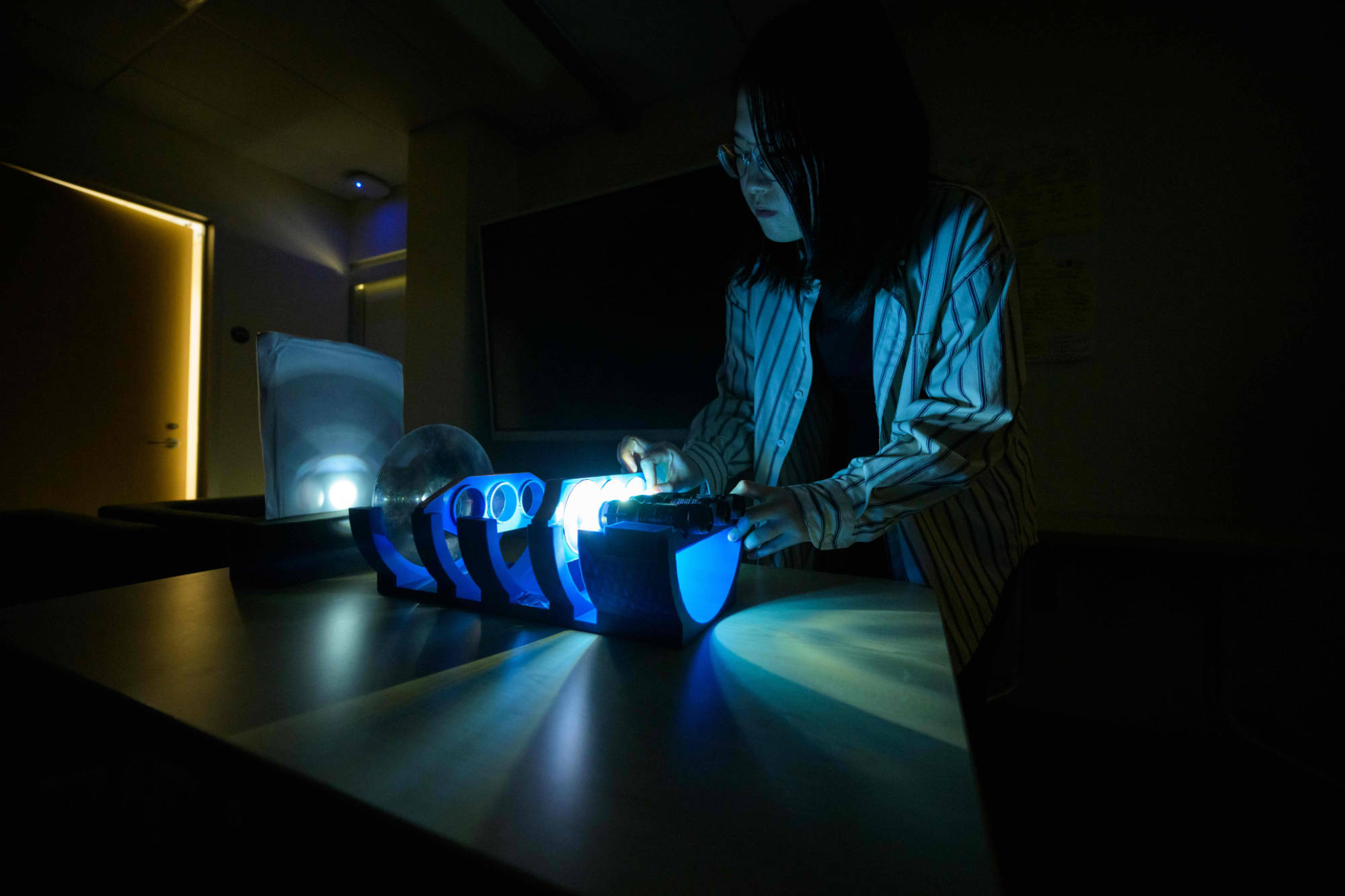

Bai and Hall began collaborating on a project called the Tabletop Interactive Play System (TIPS) to help parents learn ASL in a natural setting. The system uses a camera and microphone to observe the parent and child interacting, and then uses a projector to present videos of relevant signs retrieved via artificial intelligence from multiple ASL libraries.

In addition to a tabletop version, Bai has been developing versions for tablets, smart watches, and smart glasses, together with her team of undergraduate and graduate students with backgrounds in computer science, data science, and neuroscience. She has also collaborated with student fellows from the Rochester Bridges to the Doctorate program and other researchers from the Deaf community such as Athena Willis, a scholar in the Rochester Postdoctoral Partnership from the University's Department of Neuroscience.

Rochester, reportedly home to the country's largest population of DHH people per capita, is a uniquely rich setting for researching assistive technologies for the Deaf community. Hall says Bai's willingness to learn from and collaborate with the Deaf community has helped improve the effectiveness of the tool.

"Often we've seen hearing people, hearing researchers become involved in Deaf-related things, they learn something interesting about Deaf people and want to run with it for their own work. Even with the best of intentions, that can go awry very quickly if they are not collaborating with Deaf people and the community at all or in the right way," says Hall. "My experience with Dr. Bai, though, she really started with a good foundation and kept collaborating with me in a very positive way, so it's been a great partnership from the very beginning."

Demystifying machine learning

As AI provides more recommendations to kids about the books they read, shows they watch, or toys they buy, Bai wants to provide learning opportunities so kids can use the technology and understand how it works to make it less of a "black box." She earned a prestigious Faculty Early Career Development (CAREER) award from the National Science Foundation to develop technologies that help K-12 students demystify machine learning, an integral aspect of current approaches to AI.

Partnering with researchers from the Department of Computer Science, including Albert Arendt Hopeman Professor Jiebo Luo, and from the Warner School of Education (Frederica Warner Professor Raffaella Borasi, Associate Professor Michael Daley, and Associate Professor April Luehmann), her team developed visualization tools that help K-12 students and their teachers use machine learning to make sense of data and pursue scientific discovery, even if they do not have programming skills.

Bai has been piloting the web-based tool her team developed, GroupIt, with K-12 teachers to see how she can help the next generation make sense of big data. She says working with teachers has been crucial because they are on the frontlines of helping children make sense of AI.

"Teachers play such a critical role in integrating AI education in the STEM classroom, but it's so new for them both technologically and pedagogically," says Bai. "We want to empower teachers with easy-to-use tools so they can create more authentic learning activities that integrate data into their classroom, whether they're teaching hard sciences or social sciences."

To help K-12 students understand how AI affects them, Bai and her students also developed an augmented reality game called BeeTrap. The game uses bees pollinating flowers as an analogy for AI-powered recommendation systems, illustrating how preference-based selection works: when a bee repeatedly chooses to pollinate only certain types of flowers, the overall biodiversity of flowers in the environment declines.

"BeeTrap explains the mechanism that makes recommendations more or less relevant and diverse to a person," says Bai. "The goal is to help children realize the value of information and how things are being selectively recommended to people based on previous choices they made and other personal information."

Bai says this is especially important for marginalized groups, who can be affected by biases in AI systems related to race, ethnicity, gender, and other factors. She has introduced BeeTrap to students in several summer programs, including the Upward Bound pre-college program run by the University's David T. Kearns Center and the Freedom Scholars Learning Center in the city of Rochester.
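The article doesn't describe how BeeTrap is built. As a rough illustration of the feedback loop the game is meant to teach, here is a toy Python simulation (a sketch, not BeeTrap's code). A hypothetical recommender usually suggests whichever flower type the bee has visited most, and only with probability `explore` suggests a random type. The flower names, the `explore` parameter, and the use of Shannon entropy as the diversity measure are all assumptions made for illustration.

```python
import math
import random
from collections import Counter

# Hypothetical flower categories; BeeTrap's actual content is not described here.
FLOWER_TYPES = ["daisy", "lavender", "sunflower", "clover", "poppy"]


def shannon_diversity(counts: Counter) -> float:
    """Shannon entropy (in bits) of how visits are spread across flower types."""
    total = sum(counts.values())
    return sum((c / total) * math.log2(total / c) for c in counts.values() if c > 0)


def recommend(history: Counter, rng: random.Random, explore: float) -> str:
    """Suggest the most-visited type so far, or a random type with probability `explore`."""
    if not history or rng.random() < explore:
        return rng.choice(FLOWER_TYPES)
    # Exploit past choices; ties are broken by the order of FLOWER_TYPES.
    return max(FLOWER_TYPES, key=lambda flower: history[flower])


def simulate(rounds: int, explore: float, seed: int = 0) -> Counter:
    """Let a bee follow every recommendation and record which types it visits."""
    rng = random.Random(seed)
    history: Counter = Counter()
    for _ in range(rounds):
        choice = recommend(history, rng, explore)
        history[choice] += 1  # each visit feeds back into the next recommendation
    return history


if __name__ == "__main__":
    for explore in (0.0, 0.2, 1.0):
        visits = simulate(500, explore)
        print(f"explore={explore}: {dict(visits)}, "
              f"diversity={shannon_diversity(visits):.2f} bits")
```

With `explore=0.0`, the first random pick locks in and every later visit goes to the same flower type, so diversity drops to zero. Raising `explore` keeps more types in the mix, which mirrors the trade-off between relevance and diversity that Bai describes.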

The team is also creating more tangible representations of AI. OptiDot, for example, is a 3D-printed optical device that shows how AI might suggest different foods based on a person's preference for sweet or salty snacks, or for fatty or healthy options.
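OptiDot is a physical optical device, and the article doesn't say how its suggestions are computed. The general idea it illustrates, ranking options by how close they sit to a person's stated preferences, can be sketched in a few lines of Python. The snack catalog, the two axes scaled to [-1, 1], and Euclidean distance as the similarity measure are all hypothetical choices, not OptiDot's design.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical snack catalog. Each snack sits on two axes in [-1, 1]:
#   taste:  -1 = very salty, +1 = very sweet
#   health: -1 = very fatty, +1 = very healthy
SNACKS: Dict[str, Tuple[float, float]] = {
    "potato chips": (-0.9, -0.8),
    "pretzels": (-0.7, 0.1),
    "salted almonds": (-0.5, 0.6),
    "chocolate bar": (0.9, -0.7),
    "fruit salad": (0.7, 0.9),
    "yogurt with honey": (0.5, 0.5),
}


def recommend_snacks(preference: Tuple[float, float], k: int = 3) -> List[str]:
    """Return the k snacks closest (by Euclidean distance) to the preference point."""
    ranked = sorted(SNACKS.items(), key=lambda item: math.dist(preference, item[1]))
    return [name for name, _ in ranked[:k]]


if __name__ == "__main__":
    print(recommend_snacks((-1.0, -1.0), k=2))  # salty and fatty
    print(recommend_snacks((1.0, 1.0), k=2))    # sweet and healthy
```

In this made-up catalog, a salty, fatty preference of `(-1.0, -1.0)` surfaces potato chips and pretzels, while a sweet, healthy preference of `(1.0, 1.0)` surfaces fruit salad and yogurt with honey: the suggestions simply follow where the stated preference sits.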

Ultimately, Bai thinks it will take a multifaceted approach to help students harness the power of AI, but she is excited to develop tools that can help them get there.

"There is a lot more work to be done to improve the learning experiences and make AI accessible and relatable for students," says Bai. "We're happy to play a role in helping to make that happen."